Grafana: import multiple dashboards at once

Importing a single dashboard into Grafana is easy. Importing many files at once is much harder, because the Grafana UI has no bulk import or bulk export feature. The Python script below uses the Grafana HTTP API to import a whole directory of dashboards in one run.

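Before running the script, open the script file and update the HOST and API_KEY variables. The script depends on the requests library (pip install requests). To use basic authentication instead of an API key, set the IMPORT_DASH_USERNAME and IMPORT_DASH_PASSWORD environment variables; when IMPORT_DASH_USERNAME is set, the API key is not sent.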

If you don’t know how to create an API key, read my Grafana bulk export at once post, where I explain how to generate one.
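Both scripts decide how to authenticate with the same rule: when IMPORT_DASH_USERNAME is unset, the API key is sent as a bearer token; otherwise the username and password are sent as HTTP basic auth. Below is a minimal sketch of that rule as a standalone function. The name build_auth is hypothetical, and it builds the basic-auth header by hand, whereas the scripts let requests do it through auth=(USERNAME, PASSWORD).

```python
import base64
from typing import Dict, Optional


def build_auth(api_key: str, username: Optional[str], password: Optional[str]) -> Dict[str, str]:
    """Return HTTP headers for a Grafana API request (hypothetical helper).

    Mirrors the scripts' rule: no username means "use the API key as a bearer
    token"; a username means "use HTTP basic auth".
    """
    headers = {"Content-Type": "application/json"}
    if username is None:
        headers["Authorization"] = "Bearer %s" % api_key
    else:
        # Basic auth sends base64("user:password"), which is what requests
        # produces for auth=(user, password).
        raw = "%s:%s" % (username, password or "")
        headers["Authorization"] = "Basic %s" % base64.b64encode(raw.encode()).decode()
    return headers


print(build_auth("my-key", None, None)["Authorization"])  # Bearer my-key
print(build_auth("", "admin", "admin")["Authorization"])  # Basic YWRtaW46YWRtaW4=
```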

The script reads every dashboard file in the dashboards/ directory (set by DIR) and in its immediate subdirectories, and imports each one into the Grafana instance defined by HOST. Deeper subdirectories are not scanned.
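The file discovery step can be isolated and tested on its own. This sketch uses a hypothetical find_dashboard_files helper and assumes dashboards are stored as .json files: files directly in the root get an empty subfolder name, files one level down get their subdirectory name, and anything deeper is ignored, as in the scripts.

```python
import os
import tempfile
from typing import List, Tuple


def find_dashboard_files(root: str) -> List[Tuple[str, str]]:
    """Return (subfolder, filename) pairs for *.json files (hypothetical helper).

    Only root and its immediate subdirectories are scanned.
    """
    found = []
    for entry in sorted(os.listdir(root)):
        path = os.path.join(root, entry)
        if os.path.isdir(path):
            for name in sorted(os.listdir(path)):
                if name.endswith(".json") and os.path.isfile(os.path.join(path, name)):
                    found.append((entry, name))
        elif entry.endswith(".json"):
            found.append(("", entry))
    return found


# Build a throwaway layout and list what would be imported.
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "team-a", "nested"))
    for rel in ["home.json", "team-a/cpu.json", "team-a/notes.txt", "team-a/nested/deep.json"]:
        with open(os.path.join(root, rel), "w") as f:
            f.write("{}")
    print(find_dashboard_files(root))  # [('', 'home.json'), ('team-a', 'cpu.json')]
```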

```python
#!/usr/bin/env python
"""Grafana dashboard importer script."""

import json
import os

import requests

HOST = "http://localhost:3000"
API_KEY = "YOUR_API_KEY"  # replace with your Grafana API key

# Optional basic auth: if IMPORT_DASH_USERNAME is set, it is used instead of API_KEY.
USERNAME = os.getenv("IMPORT_DASH_USERNAME")
PASSWORD = os.getenv("IMPORT_DASH_PASSWORD")

DIR = "dashboards/"


def main():
    # Import JSON files in DIR itself and in its immediate subdirectories.
    for entry in os.listdir(DIR):
        path = os.path.join(DIR, entry)
        if os.path.isdir(path):
            print("started reading files from dir %s" % entry)
            for file in os.listdir(path):
                if file.endswith(".json"):
                    importDashboard(file, entry)
        elif os.path.isfile(path) and entry.endswith(".json"):
            print("started reading file %s" % entry)
            importDashboard(entry, "")


def readFile(path):
    with open(path, "r") as f:
        return json.load(f)


def importDashboard(file, subfolder):
    dash = readFile(os.path.join(DIR, subfolder, file))

    headers = {"Content-Type": "application/json"}
    auth = None
    if USERNAME is None:
        headers["Authorization"] = "Bearer %s" % API_KEY
    else:
        auth = (USERNAME, PASSWORD)

    # Remove the exported id/uid so Grafana creates the dashboard instead of
    # looking for an existing one with those ids.
    dash.pop("id", None)
    dash.pop("uid", None)

    # No folder is specified, so Grafana saves the dashboard in the General folder.
    data = {"dashboard": dash, "overwrite": True}

    r = requests.post(
        "%s/api/dashboards/db" % HOST, json=data, headers=headers, auth=auth
    )
    if r.status_code != 200:
        print(
            "error while importing dashboard %s error code %s and reason %s"
            % (file, r.status_code, r.text)
        )
    else:
        print("Dashboard %s successfully imported" % file)


if __name__ == "__main__":
    main()
```

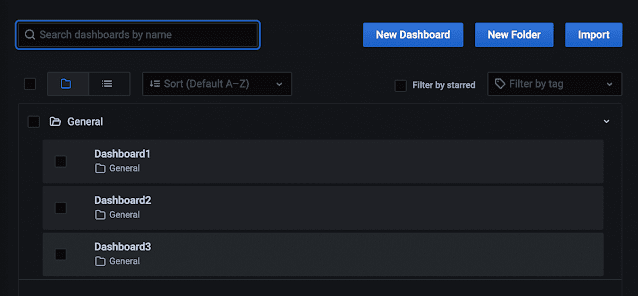

By default, the imported dashboards are saved in Grafana's General folder.

Open Grafana and you will see the imported dashboards inside the General folder (see the screenshot above).
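Whether a dashboard lands in General depends on the request body sent to POST /api/dashboards/db. Here is a minimal sketch of building that body, assuming the exported JSON is the bare dashboard model rather than a {"dashboard": ..., "meta": ...} envelope; build_import_payload is a hypothetical name. With no folder field, Grafana stores the dashboard in General; on Grafana versions that accept folderUid, passing it selects another folder.

```python
import json
from typing import Any, Dict, Optional


def build_import_payload(dashboard: Dict[str, Any], folder_uid: Optional[str] = None) -> Dict[str, Any]:
    """Build a body for POST /api/dashboards/db (hypothetical helper).

    The exported "id" and "uid" are dropped so the dashboard is not tied to
    the ids of the instance it was exported from. Without folder_uid no
    folder field is sent, so Grafana uses the General folder.
    """
    dash = dict(dashboard)  # shallow copy: the caller's dict keeps its id/uid
    dash.pop("id", None)
    dash.pop("uid", None)
    payload: Dict[str, Any] = {"dashboard": dash, "overwrite": True}
    if folder_uid is not None:
        payload["folderUid"] = folder_uid
    return payload


exported = {"id": 42, "uid": "abc123", "title": "CPU usage", "panels": []}
print(json.dumps(build_import_payload(exported), sort_keys=True))
# {"dashboard": {"panels": [], "title": "CPU usage"}, "overwrite": true}
```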

One disadvantage of the script above is that all dashboards end up in the General folder, even if you organized the JSON files into subdirectories on disk.

Bulk import with folder structure

If you want Grafana folders to be created dynamically while importing dashboards, use the script below.

Inside the dashboards/ directory, create a subdirectory for each Grafana folder you want and place the dashboard JSON files in it, then run the script.

For each subdirectory, the script creates a Grafana folder with the same name if one does not already exist, and stores the folder ID in a dictionary so that each folder is created only once, which reduces the number of API calls.
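The caching idea can be shown without a Grafana server. In this sketch, FolderCache and fake_create are hypothetical: fake_create stands in for the POST /api/folders request and only records each call, so you can see that every folder title triggers at most one creation call, while top-level files map to the General folder (id 0).

```python
from typing import Callable, Dict, List


class FolderCache:
    """Map folder titles to Grafana folder ids, creating each folder at most once.

    create_folder is a hypothetical callable standing in for the
    POST /api/folders call: it takes a title and returns the new folder id.
    """

    def __init__(self, create_folder: Callable[[str], int]) -> None:
        self._create_folder = create_folder
        # "" is a file directly in the top-level directory: General, id 0.
        self._ids: Dict[str, int] = {"": 0, "General": 0}

    def folder_id(self, title: str) -> int:
        if title not in self._ids:
            self._ids[title] = self._create_folder(title)
        return self._ids[title]


calls: List[str] = []


def fake_create(title: str) -> int:
    calls.append(title)  # record every simulated API call
    return 100 + len(calls)


cache = FolderCache(fake_create)
for subfolder in ["team-a", "team-a", "", "team-b", "team-a"]:
    print(subfolder or "(top level)", "->", cache.folder_id(subfolder))
print("API calls:", calls)  # API calls: ['team-a', 'team-b']
```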

```python
#!/usr/bin/env python
"""Grafana dashboard importer script that recreates subdirectories as folders."""

import json
import os
import sys

import requests

HOST = "http://localhost:3000"
API_KEY = "YOUR_API_KEY"  # replace with your Grafana API key

# Optional basic auth: if IMPORT_DASH_USERNAME is set, it is used instead of API_KEY.
USERNAME = os.getenv("IMPORT_DASH_USERNAME")
PASSWORD = os.getenv("IMPORT_DASH_PASSWORD")

DIR = "dashboards/"

# Cache of folder title -> Grafana folder id. Files directly in DIR
# (subfolder "") go to the General folder, which has id 0.
createdFolderNames = {"": 0, "General": 0}


def getAuth():
    headers = {"Content-Type": "application/json"}
    auth = None
    if USERNAME is None:
        headers["Authorization"] = "Bearer %s" % API_KEY
    else:
        auth = (USERNAME, PASSWORD)
    return headers, auth


def loadExistingFolders():
    # Reuse folders that already exist in Grafana instead of recreating them.
    headers, auth = getAuth()
    r = requests.get("%s/api/folders" % HOST, headers=headers, auth=auth)
    r.raise_for_status()
    for folder in r.json():
        createdFolderNames[folder["title"]] = folder["id"]


def main():
    loadExistingFolders()
    # Import JSON files in DIR itself and in its immediate subdirectories.
    for entry in os.listdir(DIR):
        path = os.path.join(DIR, entry)
        if os.path.isdir(path):
            print("started reading files from dir %s" % entry)
            for file in os.listdir(path):
                if file.endswith(".json"):
                    importDashboard(file, entry)
        elif os.path.isfile(path) and entry.endswith(".json"):
            print("started reading file %s" % entry)
            importDashboard(entry, "")


def readFile(path):
    with open(path, "r") as f:
        return json.load(f)


def importDashboard(file, subfolder):
    dash = readFile(os.path.join(DIR, subfolder, file))
    headers, auth = getAuth()

    # Stop if two panels share the same id.
    ids = set()
    for panel in dash.get("panels", []):
        panel_id = panel.get("id")
        if panel_id is None:
            continue
        if panel_id in ids:
            print("Duplicate panel id %s in %s" % (panel_id, file))
            sys.exit(1)
        ids.add(panel_id)

    # Remove the exported id/uid so Grafana creates the dashboard instead of
    # looking for an existing one with those ids.
    dash.pop("id", None)
    dash.pop("uid", None)

    folderId = createFolderInGrafana(subfolder, auth, headers)
    if folderId is None:
        return  # folder creation failed; the error was already printed

    data = {"dashboard": dash, "overwrite": True, "folderId": folderId}
    r = requests.post(
        "%s/api/dashboards/db" % HOST, json=data, headers=headers, auth=auth
    )
    if r.status_code != 200:
        print(
            "error while importing dashboard %s error code %s and reason %s"
            % (file, r.status_code, r.text)
        )
    else:
        print("%s successfully imported" % file)


def createFolderInGrafana(foldername, auth, headers):
    """Return the id of the Grafana folder called foldername, creating it if needed."""
    if foldername in createdFolderNames:
        return createdFolderNames[foldername]

    r = requests.post(
        "%s/api/folders" % HOST, json={"title": foldername}, headers=headers, auth=auth
    )
    if r.status_code != 200:
        print(
            "error while creating folder %s error code %s and reason %s"
            % (foldername, r.status_code, r.text)
        )
        return None

    folderId = r.json()["id"]
    print("Grafana folder %s created with id %s" % (foldername, folderId))
    createdFolderNames[foldername] = folderId
    return folderId


if __name__ == "__main__":
    main()
```

Once the script finishes, open Grafana and check the folder structure: it mirrors the directory structure we created on the file system.
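To check the result without clicking through the UI, you can list dashboards through Grafana's search API and group them by folder. This is a sketch using only the standard library. It assumes GET /api/search?type=dash-db with a bearer API key, and that search results include a folderTitle field only for dashboards outside General. fetch_dashboards and group_by_folder are hypothetical names, and the sample data is made up to show the shape.

```python
import json
import urllib.request
from collections import defaultdict
from typing import Any, Dict, List


def fetch_dashboards(host: str, api_key: str) -> List[Dict[str, Any]]:
    """List dashboards via Grafana's search API (assumed endpoint and auth)."""
    req = urllib.request.Request(
        "%s/api/search?type=dash-db" % host,
        headers={"Authorization": "Bearer %s" % api_key},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def group_by_folder(results: List[Dict[str, Any]]) -> Dict[str, List[str]]:
    """Group dashboard titles by folder; items without folderTitle count as General."""
    tree: Dict[str, List[str]] = defaultdict(list)
    for item in results:
        tree[item.get("folderTitle", "General")].append(item["title"])
    return {folder: sorted(titles) for folder, titles in sorted(tree.items())}


# Offline example with made-up search results:
sample = [
    {"title": "Home", "type": "dash-db"},
    {"title": "CPU", "type": "dash-db", "folderTitle": "team-a"},
    {"title": "Disk", "type": "dash-db", "folderTitle": "team-a"},
]
for folder, titles in group_by_folder(sample).items():
    print(folder, titles)
# General ['Home']
# team-a ['CPU', 'Disk']
```

Against a live instance you would call group_by_folder(fetch_dashboards(HOST, API_KEY)) and compare the output with your dashboards/ directory.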